A bit represents one of two possible states: 0 or 1.

Put simply, the output of a flip-flop is a bit.

With N bits we can represent 2^N distinct values.
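Here is a quick illustration of the 2^N rule as a C sketch. The helper names are hypothetical. It counts the distinct values N bits can hold and the largest unsigned value among them, so 8 bits give 256 values, 0 through 255.

```c
#include <stdint.h>

// Number of distinct values representable with n bits: 2^n.
// Valid for n in [0, 63]; shifting a 64-bit value by 64 is undefined behavior in C.
static uint64_t values_for_bits(unsigned n) {
    return (uint64_t)1 << n;
}

// Largest unsigned value representable with n bits (2^n - 1), same range of n.
static uint64_t max_unsigned_for_bits(unsigned n) {
    return ((uint64_t)1 << n) - 1;
}
```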

At a high level, every computation can be represented as a data transform: we start with some input data, apply a computational transform, and produce some output data.

That is, A -- XForm --> B. This applies in general to everything computers do. Here A and B are data stored in memory, and that's why we care about memory management.

Some examples of this flow:

If you’re a game dev: Game Level State -- “Render” --> Framework Pixel Data

If you’re a gameplay dev: Game State at t=0 -- “Tick” --> Game State at t=1

If you’re a frontend dev: Application State -- “UI Build” --> UI hierarchy

If you’re a compiler dev: Text -- “Parse” --> Abstract Syntax Tree
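To make A -- XForm --> B concrete, here is a minimal C sketch of the gameplay “Tick” case. The `GameState` fields and the `tick` function are hypothetical. The point is only that the transform reads state A and produces a new state B.

```c
// Hypothetical game state, purely for illustration.
typedef struct {
    float    player_x;
    float    player_velocity_x;
    unsigned frame_index;
} GameState;

// A -- "Tick" --> B: takes the state at time t and produces the state at t+1.
// The input is passed by value and left untouched; the output is a new value.
static GameState tick(GameState previous, float dt) {
    GameState next = previous;
    next.player_x += previous.player_velocity_x * dt;
    next.frame_index += 1;
    return next;
}
```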

All four of these applications can run on the same machine, and everything works fine as long as we manage memory carefully so that they don't conflict.

Naively, we might picture each program's addresses being stored in memory in order, but it's more complex than that: the mapping from address to storage is not linear, and can look arbitrary. An address in a Virtual Address Space can land in a completely different Physical Memory location. Each application has its own virtual addresses, and their contents are stored separately in parts of physical memory managed by the OS. The operating system maintains a mapping data structure, called a Page Table, which maps Virtual Addresses to Physical Addresses.
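Here is a toy model of the page-table lookup as a C sketch. It assumes 4 KiB pages and a single-level table of 16 entries, and `PageTable` and `translate` are hypothetical names. Real hardware uses multi-level tables walked by the MMU, but splitting an address into a page number plus an offset is the same idea.

```c
#include <stdbool.h>
#include <stdint.h>

// Toy model, not a real hardware layout: 4 KiB pages, single-level table with 16 entries.
#define PAGE_SIZE  4096u
#define PAGE_COUNT 16u

typedef struct {
    bool     present[PAGE_COUNT]; // is this virtual page mapped?
    uint32_t frame[PAGE_COUNT];   // physical frame number backing each virtual page
} PageTable;

// Translate a virtual address into a physical one.
// Returns false (a "page fault" in this toy) if the page is not mapped.
static bool translate(const PageTable *table, uint32_t virtual_address,
                      uint32_t *out_physical_address) {
    uint32_t page   = virtual_address / PAGE_SIZE; // which virtual page
    uint32_t offset = virtual_address % PAGE_SIZE; // position inside that page
    if (page >= PAGE_COUNT || !table->present[page]) {
        return false;
    }
    *out_physical_address = table->frame[page] * PAGE_SIZE + offset;
    return true;
}
```

Two processes could each own a `PageTable` that maps virtual page 0 to a different physical frame, which is how both can use the same virtual address without stepping on each other.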

Q Why not store data directly in Physical Memory? Why go through Virtual Address Spaces?

A Take this code, for example:

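```c
u64 *ptr = (u64*)0x100;  // 0x100 is a virtual address, not a physical one
*ptr = 1234;             // on access, the MMU translates it through the page tables
```

Here the data is not necessarily stored at physical address 0x100. The virtual address 0x100 is translated by the Memory Management Unit (MMU) at runtime, when it is accessed, and mapped through the page tables to whatever physical address in RAM the OS chose. (In practice most OSes leave the lowest page unmapped, so this particular store would fault; the point is only that the address is virtual.) The conclusion: memory addressing is dynamic, via virtual-to-physical translation, so the physical location cannot be predetermined at compile time. This indirection is also what lets the four programs above each use the same virtual address without overwriting each other's data.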

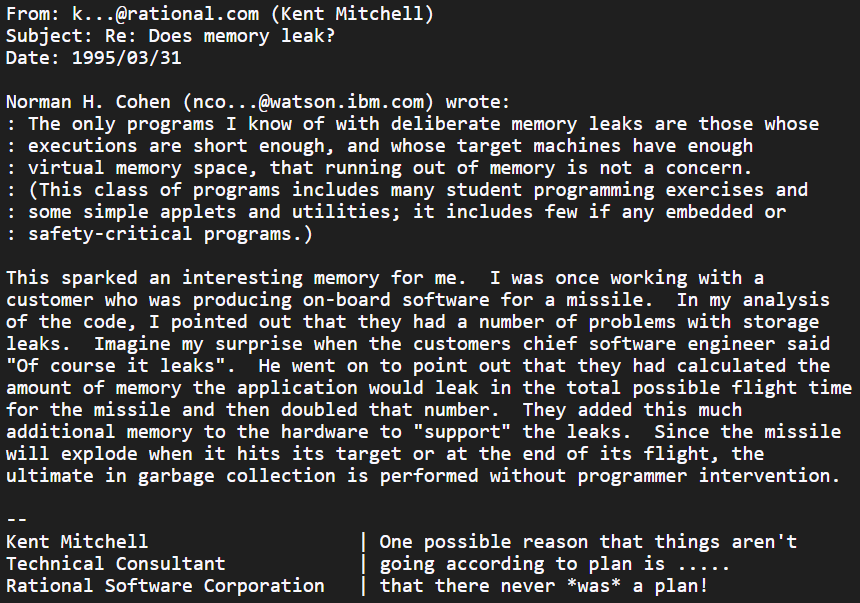

Note: a super interesting example of a null garbage collector:

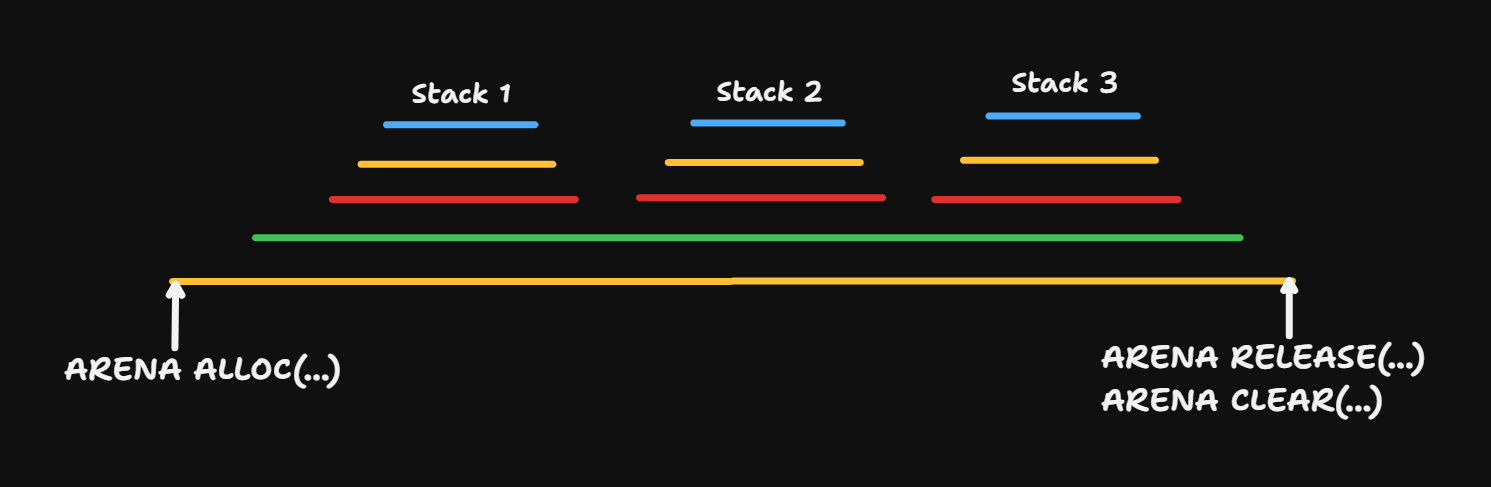

So far, getting data into memory looks fine, but data that goes into memory stays there even when we no longer use it. The good part about memory is that we can reuse it: an ALLOC function allocates memory and a matching RELEASE function frees it. The time between ALLOC and RELEASE is called the Lifetime.

```c
STRING8 FORMATSTRINGALLOC(....);
void STRING8RELEASE(STRING8);
```

This looks good, and it stops unused data from piling up in memory. But when this pattern is used everywhere, every allocation carries its own lifetime: data can be released while another lifetime still needs it, and keeping all of these lifetimes consistent becomes a constant maintenance burden.
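One possible shape for this ALLOC/RELEASE pair, as a C sketch. `String8`, `format_string_alloc` and `string8_release` are hypothetical stand-ins for `STRING8`, `FORMATSTRINGALLOC` and `STRING8RELEASE`, built on `malloc`/`free` and `vsnprintf`. Each call opens its own lifetime, and every call site has to close it.

```c
#include <stdarg.h>
#include <stdio.h>
#include <stdlib.h>

// Hypothetical stand-in for the STRING8 type: a pointer plus a length.
typedef struct {
    char  *data;
    size_t size;
} String8;

// ALLOC: formats into a freshly malloc'd buffer. The caller owns the result
// and must pass it to string8_release exactly once; that span is its lifetime.
static String8 format_string_alloc(const char *fmt, ...) {
    String8 result = {0};
    va_list args;
    va_start(args, fmt);
    int needed = vsnprintf(NULL, 0, fmt, args); // first pass: measure
    va_end(args);
    if (needed < 0) return result;

    result.data = malloc((size_t)needed + 1);
    if (!result.data) return result;

    va_start(args, fmt);
    vsnprintf(result.data, (size_t)needed + 1, fmt, args); // second pass: write
    va_end(args);
    result.size = (size_t)needed;
    return result;
}

// RELEASE: ends the lifetime started by format_string_alloc.
static void string8_release(String8 string) {
    free(string.data);
}
```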

One thing we can do is batch the ALLOCs: put them all onto a stack, then release them all at once.

```c
u32 *x_ptr = (u32*) stack_top;   // the allocation starts at the current top of the stack
stack_top += sizeof(u32) * 256;  // push: reserve room for 256 u32s
```

Here we push onto the stack by bumping `stack_top`, and when we are done we release everything at once by moving `stack_top` back. Another optimization we get from this: the work done to free memory happens once per batch (for example, once per thread) rather than once per ALLOC.
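Here is a self-contained version of that stack push as a C sketch. The 64 KiB `stack_memory` buffer, the 16-byte rounding and the `stack_push`/`stack_release_all` helpers are assumptions for illustration. Releasing is a single pointer reset, no matter how many pushes came before.

```c
#include <stddef.h>
#include <stdint.h>

typedef uint32_t u32;

// Hypothetical fixed-size backing buffer standing in for "the stack".
static _Alignas(16) unsigned char stack_memory[64 * 1024];
static unsigned char *stack_top = stack_memory;

// Push: hand out the current top and bump it (size rounded up to 16 bytes
// so later pushes stay aligned). Returns NULL if the buffer is full.
static void *stack_push(size_t size) {
    size_t used    = (size_t)(stack_top - stack_memory);
    size_t aligned = (size + 15u) & ~(size_t)15u;
    if (aligned < size || aligned > sizeof(stack_memory) - used) return NULL;
    void *result = stack_top;
    stack_top += aligned;
    return result;
}

// Release everything pushed so far in one step: just reset the top.
static void stack_release_all(void) {
    stack_top = stack_memory;
}
```

For example, `u32 *x_ptr = stack_push(sizeof(u32) * 256);` reserves the same 1024 bytes as the two-line snippet, and one `stack_release_all()` frees it along with everything else.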

OK, but a single stack can cause problems: a stack can only be released from the top, so lifetimes that don't nest cleanly get tangled together on it. So we use N stacks instead. This is where the Arena Allocator comes in: a linear allocator used to bundle lifetimes. It can hold multiple stacks and coordinate between them. One Arena = One Lifetime Tree.
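Here is a minimal fixed-capacity arena in C. It assumes a hypothetical `arena_alloc`/`arena_push`/`arena_pos`/`arena_pop_to`/`arena_release` API and ignores growth. The whole arena is the root lifetime. Saving a position and popping back to it gives a nested child lifetime, which is one way to read “One Arena = One Lifetime Tree”.

```c
#include <stddef.h>
#include <stdlib.h>

// One contiguous block plus a "used" cursor. Growth is deliberately left out.
typedef struct {
    unsigned char *base;
    size_t capacity;
    size_t pos; // bytes handed out so far
} Arena;

static Arena arena_alloc(size_t capacity) {
    Arena arena = {0};
    arena.base = malloc(capacity);
    if (arena.base) arena.capacity = capacity;
    return arena;
}

// Ends the arena's (root) lifetime: everything pushed onto it goes at once.
static void arena_release(Arena *arena) {
    free(arena->base);
    *arena = (Arena){0};
}

// Bump-allocate size bytes at a 16-byte-rounded offset from base. NULL if full.
static void *arena_push(Arena *arena, size_t size) {
    size_t start = (arena->pos + 15u) & ~(size_t)15u;
    if (start > arena->capacity || size > arena->capacity - start) return NULL;
    arena->pos = start + size;
    return arena->base + start;
}

// Nested lifetimes: remember a position, allocate, then pop back to it.
static size_t arena_pos(const Arena *arena) { return arena->pos; }

static void arena_pop_to(Arena *arena, size_t pos) {
    if (pos < arena->pos) arena->pos = pos;
}
```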

We can just allocate Arenas to threads

Q What if an arena's stack fills up?

A We have five options:

- Panic - Not the best option, but works

- Create a new stack and connect the old one with it. Classic Linked List 101

- Create a new stack with a larger size and move the existing elements into it

- Use an infinite, self-growing array - the classic solution, but it sucks

- Use Segmented Stacks with Stacklet Allocation - Allocate new stack segments (stacklets) as needed and link them together
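Option two (chaining a new stack onto the old one) looks like this as a C sketch. `ChainedArena`, `Block`, `chained_push`, `chained_release` and the 4 KiB default block size are hypothetical. A full block is never moved; a new block simply points back at it, and releasing the lifetime walks the chain once.

```c
#include <stddef.h>
#include <stdlib.h>

#define CHAIN_BLOCK_SIZE 4096u // assumed default block size

typedef struct Block {
    struct Block  *prev;     // the older, full block
    size_t         capacity;
    size_t         pos;
    unsigned char *data;     // points just past this header
} Block;

typedef struct {
    Block *current;          // newest block; older blocks hang off ->prev
} ChainedArena;

static Block *block_new(size_t capacity, Block *prev) {
    Block *block = malloc(sizeof(Block) + capacity); // header + data in one allocation
    if (!block) return NULL;
    block->prev = prev;
    block->capacity = capacity;
    block->pos = 0;
    block->data = (unsigned char *)(block + 1);
    return block;
}

static void *chained_push(ChainedArena *arena, size_t size) {
    Block *block = arena->current;
    size_t start = block ? ((block->pos + 15u) & ~(size_t)15u) : 0; // round offset up to 16
    if (!block || start > block->capacity || size > block->capacity - start) {
        // Current block is full (or missing): chain a new one big enough for this request.
        size_t capacity = size > CHAIN_BLOCK_SIZE ? size : CHAIN_BLOCK_SIZE;
        block = block_new(capacity, arena->current);
        if (!block) return NULL;
        arena->current = block;
        start = 0;
    }
    block->pos = start + size;
    return block->data + start;
}

// Releasing the whole lifetime walks the chain once, newest to oldest.
static void chained_release(ChainedArena *arena) {
    Block *block = arena->current;
    while (block) {
        Block *prev = block->prev;
        free(block);
        block = prev;
    }
    arena->current = NULL;
}
```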

To implement this, we use Thread-Local Scratch Arenas: each thread gets its own arenas for handling temporary lifetimes.

Q Why use Thread-Local Scratch Arenas when we could just use stacks?

A With plain stacks, a given code path can be handed any arena, representing any lifetime, so its temporary allocations can end up on the same arena as data its caller wants to keep, and the arenas conflict.
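Here is a minimal sketch of thread-local scratch arenas in C. It assumes C11 `_Thread_local` and a hypothetical `scratch_begin`/`scratch_end` API. Each thread keeps two scratch arenas. A function asks for one that is *not* the arena its caller passed in for results, so temporaries and results never share an arena, and ending the scratch pops back to where it started.

```c
#include <stddef.h>
#include <stdlib.h>

typedef struct {
    unsigned char *base;
    size_t capacity;
    size_t pos;
} Arena;

static void *arena_push(Arena *arena, size_t size) {
    size_t start = (arena->pos + 15u) & ~(size_t)15u;
    if (start > arena->capacity || size > arena->capacity - start) return NULL;
    arena->pos = start + size;
    return arena->base + start;
}

// Two scratch arenas per thread. Two suffice when a function only has to
// avoid one caller-provided arena. They are never freed in this sketch.
#define SCRATCH_COUNT 2
#define SCRATCH_SIZE  (64u * 1024u)
static _Thread_local Arena scratch_arenas[SCRATCH_COUNT];

typedef struct {
    Arena *arena;
    size_t saved_pos; // restored by scratch_end, freeing everything pushed since
} Scratch;

// Return a scratch arena that is NOT `conflict` (e.g. the arena the caller
// wants the final result in), creating this thread's arena lazily.
static Scratch scratch_begin(Arena *conflict) {
    Scratch scratch = {0};
    for (int i = 0; i < SCRATCH_COUNT; i += 1) {
        Arena *candidate = &scratch_arenas[i];
        if (candidate == conflict) continue;
        if (!candidate->base) {
            candidate->base = malloc(SCRATCH_SIZE);
            if (!candidate->base) continue;
            candidate->capacity = SCRATCH_SIZE;
        }
        scratch.arena = candidate;
        scratch.saved_pos = candidate->pos;
        break;
    }
    return scratch;
}

static void scratch_end(Scratch scratch) {
    if (scratch.arena) scratch.arena->pos = scratch.saved_pos;
}
```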

Q How can we free memory in the middle of an arena? For example, a game needs to spawn things and later free them. How do we do that?

A We maintain a Free List: a linked list threading through all the released slots in the lifetime's array. When we remove something, its slot is pushed onto the free list and becomes the new head. When we add something, we pop a slot off the free list and reuse it. If the free list is empty, we push a new slot onto the arena, growing it.
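Here is the free list as a C sketch for the spawn/despawn case. `Entity`, `EntityPool`, `entity_spawn` and `entity_release` are hypothetical, and growth is left out: when the pool is full this sketch returns NULL where a real arena would grow. A released slot becomes the new head of the list, and the next spawn reuses it before touching fresh memory.

```c
#include <stddef.h>

// Hypothetical game entity; next_free only matters while it sits on the free list.
typedef struct Entity {
    struct Entity *next_free;
    float x, y;
    int   health;
} Entity;

// Entities live in an arena-style array; released slots are recycled via a free list.
typedef struct {
    Entity *slots;     // backing storage for this lifetime
    size_t  capacity;
    size_t  count;     // slots ever pushed from the backing storage
    Entity *free_list; // head of the singly linked list of released slots
} EntityPool;

static Entity *entity_spawn(EntityPool *pool) {
    Entity *entity = pool->free_list;
    if (entity) {
        pool->free_list = entity->next_free;            // reuse a released slot
    } else {
        if (pool->count == pool->capacity) return NULL; // a real arena would grow here
        entity = &pool->slots[pool->count++];           // otherwise push a new slot
    }
    *entity = (Entity){0};
    return entity;
}

static void entity_release(EntityPool *pool, Entity *entity) {
    entity->next_free = pool->free_list; // released slot becomes the new head
    pool->free_list = entity;
}
```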

Q The push and pop operations in the current arena implementation aren’t atomic, which means they aren’t thread-safe. How do you deal with memory management for multithreading?

A The arena push and pop operations are not intended to be used by multiple threads by themselves. The arena, like the stack, is a useful pattern when accessed by a single thread at a time, so either in a single-threaded context, or in some interlocked shared data structure. In general, threads should try to synchronize as little as possible, and so they will often be operating on different arenas altogether. For communication between threads, an arena is not really the correct “shape” in most cases - another primitive, like a ring buffer, is much more suitable. In other words, the arena implementation is not the right layer to solve for multithreading.
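The answer points to a ring buffer as a better shape for passing data between threads. Here is a minimal single-producer, single-consumer ring buffer using C11 `<stdatomic.h>`. `Ring`, `ring_push`, `ring_pop` and the capacity of 8 are hypothetical. It is only correct when exactly one thread pushes and exactly one other thread pops.

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

// Capacity must be a power of two so indices can be masked.
#define RING_CAPACITY 8u

typedef struct {
    int buffer[RING_CAPACITY];
    _Atomic size_t write_index; // only advanced by the producer
    _Atomic size_t read_index;  // only advanced by the consumer
} Ring;

static bool ring_push(Ring *ring, int value) {
    size_t write = atomic_load_explicit(&ring->write_index, memory_order_relaxed);
    // Acquire: don't overwrite a slot the consumer may still be reading.
    size_t read = atomic_load_explicit(&ring->read_index, memory_order_acquire);
    if (write - read == RING_CAPACITY) return false; // full
    ring->buffer[write & (RING_CAPACITY - 1)] = value;
    // Release: publish the slot contents before the new index becomes visible.
    atomic_store_explicit(&ring->write_index, write + 1, memory_order_release);
    return true;
}

static bool ring_pop(Ring *ring, int *out_value) {
    size_t read = atomic_load_explicit(&ring->read_index, memory_order_relaxed);
    // Acquire: see the slot contents the producer published.
    size_t write = atomic_load_explicit(&ring->write_index, memory_order_acquire);
    if (read == write) return false; // empty
    *out_value = ring->buffer[read & (RING_CAPACITY - 1)];
    // Release: hand the slot back to the producer.
    atomic_store_explicit(&ring->read_index, read + 1, memory_order_release);
    return true;
}
```

The arenas on each side stay single-threaded; only the ring buffer is shared, which keeps synchronization to two indices.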

Q What is a Memory Leak?

A Consider what happens when you never call free on a pointer that malloc returned to you. The first obvious point is that, of course, the malloc/free allocator never sees the pointer again, so it assumes the usage code still has it “allocated”, which means that memory can never be reused for another malloc allocation. That is what is called a Memory Leak. When the program exits or crashes, though, no resources are truly “leaked”: the OS reclaims the entire process's memory.

That said, a leak can actually be a problem. For instance, if you’re building a program that runs “forever” to interact with the user through a graphical user interface, and on every frame you call malloc several times and never free the memory you allocate, your program will leak some number of bytes per frame. Since, at least in dynamic scenarios, your program will be chugging through 60, 120, or 144 frames per second, that leak adds up quickly: leaking just 1 KiB per frame at 144 frames per second is about 144 KiB per second, or roughly 500 MiB per hour. Such a leak could prevent normal use of the program: for instance, after 30 minutes an allocation fails, or the operating system spends so much time paging memory in from disk (to let your program's memory usage keep growing) that the program becomes unusable.
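Here is the arena alternative to that per-frame malloc leak, as a C sketch. A hypothetical frame arena is reset at the start of each frame, so memory use stays bounded no matter how many frames run. `Arena`, `arena_push` and `run_frame` are illustrative names, not an existing API.

```c
#include <stddef.h>
#include <stdlib.h>

typedef struct {
    unsigned char *base;
    size_t capacity;
    size_t pos;
} Arena;

static void *arena_push(Arena *arena, size_t size) {
    size_t start = (arena->pos + 15u) & ~(size_t)15u;
    if (start > arena->capacity || size > arena->capacity - start) return NULL;
    arena->pos = start + size;
    return arena->base + start;
}

// One frame of a long-running program: everything temporary goes into
// frame_arena, and the previous frame's memory is released with one reset.
static void run_frame(Arena *frame_arena, int frame_index) {
    frame_arena->pos = 0;                                // release last frame's allocations
    char *label  = arena_push(frame_arena, 64);          // e.g. per-frame UI text
    int  *scores = arena_push(frame_arena, 100 * sizeof(int));
    if (label)  label[0]  = (char)('0' + frame_index % 10);
    if (scores) scores[0] = frame_index;
    // No free() calls: nothing outlives the frame, so usage never grows past one frame's worth.
}
```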

Note: a memory leak is very often not a problem, for example when the memory needs to stay allocated for the whole duration of the program anyway, or when the program just boots up, performs a task, and closes. Simply put, if a program starts and then dies, there is no need to free its memory, despite the common advice that cleaning up is always necessary. Freeing in such circumstances is actually a loss: the OS reclaims the whole process's memory at exit anyway, so the individual frees just waste time.

The classic example is the null garbage collector shown above.

What did we achieve with all of the above?

- Dynamically allocating memory is now ~free on average

- Releasing dynamically-allocated memory is now ~free

- Releasing memory is a per-lifetime concern, not a per-allocation concern

- (99% of code can now allocate and forget by picking an arena: a named “null garbage collector”)

- Arenas can be used for traditionally stack-allocated cases, and traditionally heap-allocated cases

- Helper & auxiliary functions now can work for both sets of problems

- Diagnostics, logging, visualization for all allocations can be implemented in a single, simple layer easily controlled by you

- Allocations are already bucketed to a unique ID – the arena pointer itself!

- No need to be dependent on complex tools & languages

- And where we do depend on such tools, we can still apply arena-style techniques wherever their underlying abstractions fall short
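Here is a sketch of the “single diagnostics layer” idea in C. The `Arena` fields, `arena_push_impl`, the `arena_push` macro and the `arena_logging_enabled` flag are hypothetical. Every allocation funnels through one function that counts pushes and bytes per arena and can log them, using the arena pointer as the bucket ID.

```c
#include <stddef.h>
#include <stdio.h>

typedef struct {
    unsigned char *base;
    size_t capacity;
    size_t pos;
    const char *name;       // hypothetical label for logs and visualizers
    size_t push_count;      // diagnostics: number of successful pushes
    size_t bytes_requested; // diagnostics: sum of requested sizes
} Arena;

static int arena_logging_enabled = 0; // flip on to trace every allocation

static void *arena_push_impl(Arena *arena, size_t size, const char *file, int line) {
    size_t start = (arena->pos + 15u) & ~(size_t)15u;
    if (start > arena->capacity || size > arena->capacity - start) return NULL;
    arena->pos = start + size;
    arena->push_count += 1;
    arena->bytes_requested += size;
    if (arena_logging_enabled) {
        // The arena pointer doubles as a unique ID for this allocation's lifetime bucket.
        fprintf(stderr, "[arena %p %s] +%zu bytes at %s:%d\n",
                (void *)arena, arena->name ? arena->name : "?", size, file, line);
    }
    return arena->base + start;
}

// Every call site goes through this one macro, so this is the single diagnostics layer.
#define arena_push(arena, size) arena_push_impl((arena), (size), __FILE__, __LINE__)
```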